
Category Archives: Artificial Intelligence [AI]

Is it time to unleash your criminological imagination?

In this blog entry, I am going to introduce a seemingly disconnected set of ideas. I say seemingly, because at the end, all will hopefully make sense. I suspect the following also demonstrates the often chaotic and convoluted nature of my thought processes.

I’ve written many times before about Criminology, at times questioning whether I have any claim to the title criminologist and, more recently, what those with the title should talk about. These come on top of hundreds of hours of study, contemplation and reflection, which provide the backdrop for why I keep questioning the discipline and my place within it. I know one of the biggest issues for me is that social sciences, like Criminology and many others, love to categorise people in lots of different ways: class, race, gender, offender, survivor, victim and so on. But people, including me, don’t like to be put in boxes; we’re complex animals and, as I always tell students, people are bloody awkward, including ourselves! There is also the far more challenging issue of being part of a discipline which has the potential to cause, rather than reduce or remove, harm, another topic I’ve blogged on before.

It’s no secret that universities across the UK and further afield are facing many serious, seemingly intractable challenges. In the UK these range from financial pressures (both institutional and individual) and austerity measures to the seemingly unstoppable rise of technology and the implicit (or explicit, depending on standpoint) message of Brexit that the country is closed to outsiders. Each of the challenges mentioned above seems to me to be anti-education: rather than expanding and sharing knowledge, they close down essential dialogue. Many years ago, a student from mainland Europe, studying in the UK on the Erasmus scheme, told me that our facilities were wonderful; they were amazed by the readily available access to IT, both far superior to what was available to them in their own country. Gratifying to hear, but what came next was far more profound: they said that all a serious student really needs is books, an enthusiastic and knowledgeable teacher and a tree to sit under. Whilst the tree to sit under might not work in the UK with our unpredictable weather, the rest struck a chord.

The world seems in chaos and war-mongers everywhere are clamouring for violence. Recent events in Darfur, Palestine, Sudan, Ukraine, Venezuela and many other parts of the world demonstrate the frailty, or even the fallacy, of international law, something Drs @manosdaskalou, @paulsquaredd and @aysheaobrien1ca0bcf715 have all eloquently blogged about. But while these discussions are important and pertinent, they cannot address the immediate harm caused to individuals and populations facing these many and varied forms of violence. Furthermore, whilst it’s been over 80 years since Raphael Lemkin first coined the term ‘genocide’, it seems world leaders are content to debate whether this situation or that situation fits the definition. But surely these discussions should be secondary; a humanitarian response is far more urgent. After all, one would hope that the police would not stand by watching as one person killed another, all whilst having a discussion around the definition of murder and whether it applied in this context.

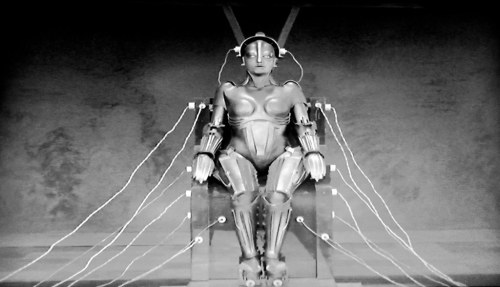

The rise of technology, in particular Generative Artificial Intelligence, has been the focus of blogs from Drs @sallekmusa, @5teveh and myself, each with their own perspective and standpoint. Efforts to combat the harmful effects of Grok enabling the creation of non-consensual pornographic images demonstrate both new forms of Violence Against Women and Girls [VAWG] and the limitations of control and enforcement. Whilst countries are rushing to ban Grok and control access to social media for children under 16, it is clear that Grok and X are just one form of GAI and social media; there is seemingly nothing to stop others taking their place. And as everyone is well aware, laws are broken on a daily basis (just look at the court backlog and the overflowing prisons), and with no apparent way of controlling children’s access to technology (something which is actively encouraged in schools, colleges and universities), these attempts seem doomed to fail. Maybe more regulation and more legislation aren’t the answer to this problem.

Above I have briefly discussed four seemingly intractable problems. In each arena, we have many thousands of people across the globe trying to solve the issues, but the problems still remain. Perhaps we should ask ourselves the following questions:

- Maybe we are asking the wrong people to come up with the answers?

- Maybe we are constraining discussions and closing down debate?

- Maybe by allowing the established and the powerful to control the narrative we just continue to recycle the same problems and the same hackneyed solutions?

What if there’s another way?

And here we come to the crux of this blog: in Criminology we are challenged to explore any problem from all perspectives; we are continually encouraged to imagine a different world, what ought or could be a better place for all. I have the privilege of running two modules, one at Level 4, Imagining Crime, and one at Level 6, Violence. In both, students work together to see the world differently, to imagine a world without violence, a world in which justice is a constant and reflection a continual practice. Walking into one of these classrooms, you may well be surprised to see how thoughtful and passionate people can be when faced with a seemingly unsolvable problem, when everything on the table is up for discussion. Although often misattributed to Einstein, the statement ‘Insanity is doing the same thing over and over again and expecting different results’ seems apposite. If we want the world to be different, we have to allow people to think about things differently, in free and safe spaces, so they can consider all perspectives, and that is where Criminology comes in.

Be fearless and unleash your criminological imagination, who knows where it might take you!

25 years is but a drop in time!

If I were a Roman, I would be sitting in my comfortable triclinium eating sweet grapes and dictating my thoughts to a scribe. It was the Roman custom of celebrating a double-faced god that started European celebrations for a new year. It was meant to be a time of reflection, contemplation and future resolutions. It is in this spirit that I shall be looking back over the year to make my final calculations. Luckily, I am not Roman, but I am mindful that over 2,025 years have passed and many people have tried to look back. Since I am not any of these people, I am going to look into the future instead.

In 25 years from now we shall be heading to the middle of the 21st century, a century that comes with great challenges. Since the start of the century there has been talk of economic bust. The banking crisis slowed down the economy and reduced people’s real incomes. The expectation then was that crime would rise, as it had before; whilst the jury may still be out, the consensus is that this crime spree did not come… at least not as expected. People became angry and their anger was translated into changes on the political map, as many countries moved to the right.

Prediction 1: This political shift to the right will intensify over the next 25 years and increase polarisation. As politics thrives in opposition, a new left will emerge to challenge the populist right. Their perspective will bring a renewed focus on previous divisions such as class, only this time class could take on a different form. This clash will define the second half of the 21st century, when people will try to recalibrate human rights across the planet. Globalisation has brought unspeakable wealth to a few people. The globalisation of citizenship will challenge this wealth and make demands on future gains.

As I write these notes, my laptop is trying to predict what I will say and put a couple of words ahead of me. Unfortunately, most of the time I do not go with its suggestions. As I humanise my device, I feel sorry for its inability to offer me the right words, and sometimes I use a suggested word to acknowledge its help, only to delete it afterwards. My relationship with technology is arguably limited, but I do wonder what will happen 25 years from now. We have been talking about using AI for medical research, vaccines, the space industry and even the environment. However, currently the biggest concern is not AI research, but AI-generated indecent images!

Prediction 2: AI is becoming a platform that we hope will expand human knowledge to levels we could not have previously anticipated. One of its limitations comes from us: our biology cannot absorb the volume of information created and there is no current interface that can sustain it. This ultimately will lead to a divide between those who favour incorporating more technology into their lives and those who will ultimately reject it. The polarisation of politics could contribute to this divide as well. The more personal and intrusive AI becomes, the more calls there will be to regulate it. Under the current framework, full regulation seems all but impossible, so it will lead to either outright rejection or complete embrace. We have seen similar divides in the past during modernity, so this is not a novel divide. What will make it more challenging now is the control it can exert over everyday life. It is difficult to predict what the long-term effects of this will be.

During the late 20th and early 21st centuries, drug abuse and trafficking seemed to continue to scandalise the public and hold its attention as much as they did back in the 1970s and 80s. Drugs have been demonised and have become the subject of countless media-driven moral panics. Their reach among the public is wide and their emotional effect is rivalled only by that of child abuse. Is drug abuse an issue we shall be considering 25 years from now?

Prediction 3: People have used substances as far back as recorded history goes. Therefore, there will be drugs in the future, to the joy of all those people who like to get high! It is most likely that the focus will be on synthetic drugs designed around their effects and how they impact people. Production is likely to change, with printers able to develop new substances on a massive scale. This will create a new supply line between those who own the technology to develop new synthetic forms and those who own the networks of supply. A takeover has happened before, so it is likely to happen again, unless these new drugs emerge under formal monopolies, like drug companies who will legalise their recreational use.

One of the biggest tensions in recent years is the possibility of another war. Several European politicians have already raised it, purporting to make predictions. Their statements, however, are clear signs of war preparation. The language is reminiscent of previous eras, and the way society is responding suggests there is some fertile ground. Nationalism is the shelter of every failed politician who promises the world and delivers nothing. Whether in Europe (EU/UK), the US or elsewhere, citizens are likely to have been subjected to promises of gaining things, of better days coming, of making things great… only to discover these were all empty words. Nothing has been offered and, in most cases, working people have found that their real incomes have shrunk. This is when a charlatan will use nationalism to push people into hating other people as the solution to their problems.

Prediction 4: Unfortunately, wars seem to happen regularly in human history despite their destructive nature. We also forget that war has never stopped, and elusive peace exists only in parts of the world where different interests converge. A combination of patriotism, national pride and rhetoric makes people overlook how damaging war is. It is woefully short-sighted not to recognise the harm war can do to them and to their own families. War is awful and destroys working people the most. In the 20th century, nuclear armament left peace hanging by a thread. This fear is foolishly being played down by fraudsters pretending to be politicians. Currently, terms such as hybrid war or proxy war are used to sanitise current conflicts. The use of drones seems to have altered the methodology of war, and the big question for the next 25 years is: will someone press THAT button? I am not sure that will be necessary because, irrespective of the method, war leaves deep wounds behind.

In recent years, discussion about the weather has raised a more pressing question: what about the environment? There is a recognised crisis that globally we seem unable to tackle, and many are already making quite bleak predictions about it. Decades ago, Habermas explored the idea of the “colonization of the lifeworld”, purporting that systemic industrial agriculture would lead to environmental degradation. Now it seems that this form of farming, greenhouse gases and deforestation are becoming the contributing factors of global warming. Inaction, or the lack of international coordination, has led to calls for immediate action. Groups formed to pressure political indecision have been met with resistance and suspicion, but ultimately the problem remains.

Prediction 5: The world acts when confronted with something imminent. In the future, catastrophic events are likely to shape views and change attitudes. Unfortunately, the planet runs on celestial, not human, time. When a major event happens, no one can predict its extent or its impact. The ambition of some of the super-rich to travel to another planet or develop something in space is merely laughable, but it is also a clear demonstration of why wealth cannot be in the hands of a few oligarchs. Life existed before them and hopefully it will continue well beyond them. On the environment, I am hopeful that people’s views will change, so that by the end of this century we will look at the practices of people like me and despair.

These are mere predictions of someone who sits in a chair having read the news of the day. They carry no weight and hold no substantive strength. There is a recognition that things will change at some level and we shall be asked to adapt to whatever new conditions we are faced with. In 25 years from now we will still be asking questions similar to those people asked 100 years ago. Whatever happens, however it happens, life always finds a way to continue.

Technology: one step forward and two steps back

I read my colleague @paulaabowles’s blog last week with amusement. Whilst the blog focussed on AI and notions of human efficiency, it resonated with me on so many different levels. Nightmarish memories of the three Es (economy, effectiveness and efficiency) under the banner of New Public Management (NPM) from the latter end of the last century came flooding back, juxtaposed with the introduction of so-called time-saving technology from around the same time. It seems we are destined to relive the same problems and issues time and time again in both our private and professional lives, although the two increasingly seem to morph into one as technology companies come up with new ways of integration and seamless working, and organisations continuously strive to become more efficient with little regard for the human cost.

Paula’s point, though, was about being human and what that means in a learning environment and elsewhere when technology encroaches on how we do things and, more importantly, why we do them. I, like a number of like-minded people, am frustrated by the rush to use the new shiny technology with little consideration of the consequences. Let me share a few examples, drawn from observation and experience, to illustrate what I mean.

I went into a well-known coffee shop the other day; in fact, I go into the coffee shop quite often. I ordered my usual coffee and my wife’s coffee, a black Americano, three-quarters full. Perhaps a little pedantic or odd, but three-quarters full makes the Americano a little stronger and has the added advantage of avoiding spillage (usually by me as I carry the tray). I was served by one of the staff and listened in bemusement as she had a conversation with a colleague and spoke to a customer in the drive-through on her headset, all whilst taking my order. Three conversations at once. One full (not three-quarters full) black Americano later, coupled with a ‘what else was it you ordered?’, suggested that my order was not given the full concentration it deserved. So, whilst speaking to three people at once might seem efficient, it turns out not to be. It might save on staff, and it might save money, but it makes for poor service. I’m not blaming the young lady who served me; after all, she has no choice in how technology is used. I do feel sorry for her, as she must have a very jumbled head at the end of the day.

On the same day, I got on a bus and attempted to pay the fare with my phone. It is supposed to be easy, but no: I held up the queue for some minutes, getting increasingly frustrated with a phone that kept freezing. The bus driver said lots of people were having trouble, something to do with the heat. But to be honest, my experience of tap and go is tap and tap and tap again as various bits of technology fail to work. The phone won’t open, it won’t recognise my fingerprint, it won’t talk to the reader, the reader won’t talk to it. The only talking is me cursing the damn thing. The return journey was a lot easier: the bus driver let everyone on without payment because his machine had stopped working. Wasn’t cash so much easier?

I remember the introduction of computers (PCs) into the office environment. It was supposed to make everything easier and everyone more efficient. All it seemed to do was tie everyone to the desk and result in redundancies as the professionals took over the administrative tasks. After all, why have a typing pool when everyone can type their own reports and letters (letters were replaced by endless, meaningless and far from efficient emails)? Efficient? Well, not really, when you consider how much money a professional person is being paid to spend a significant part of their time doing administrative tasks. Effective? No, I’m not spending the time I should be on the role I was employed to do. Economic? Well, on paper: fewer wages and a balance sheet provided by external consultants that shows savings. New technology, different era, different organisations, but the same experiences are repeated everywhere. In my old job, they set up a bureaucracy task force to solve the problem of too much time spent on administrative tasks, but rather than examine the technology behind the problem, the task force suggested more technology. Technology to solve a technologically induced problem: bonkers.

But most concerning is not how technology fails us quite often, nor how it is less efficient than it was promised to be, but how it is shaping our ability to recall things, to do the mundane but important things and how it stunts our ability to learn, how it impacts on us being human. We should be concerned that technology provides the answers to many questions, not always the right answers mind you, but in doing so it takes away our ability to enquire, critique and reason as we simply take the easy route to a ready-made solution. I can ask AI to provide me with a story, and it will make one up for me, but where is the human element? Where is my imagination, where do I draw on my experiences and my emotions? In fact, why do I exist? I wonder whether in human endeavour, as we allow technology to encroach into our lives more and more, we are not actually progressing at all as humans, but rather going backwards both emotionally and intellectually. Won’t be long now before some android somewhere asks the question, why do humans exist?

How to make a more efficient academic

Against a backdrop of successive governments’ ideology of austerity, the increasing availability of generative Artificial Intelligence [AI] has put ‘efficiency’ at the top of institutional to-do lists. But what do efficiency and its antonym, inefficiency, look like? Who decides what is efficient and inefficient? As always, a dictionary is a good place to start, and Google promptly advises me on the definition, along with some examples of usage.

The definition is relatively straightforward, but note that it states ‘minimum wasted effort or expense’, not no waste at all. Nonetheless, the dictionary does not tell us how efficiency should be measured or who should do that measuring. Neither does it tell us what full efficiency might look like, given the dictionary’s acknowledgement that there will still be time or resources wasted. Let’s explore further….

When I was a child and feeling bored, my lovely nan used to remind me of the story of James Watt and the boiling kettle and that of Robert the Bruce and the spider. The first was to remind me that being bored is just a state of mind: use the time to look around and pay attention. I wouldn’t be able to design the steam engine (that invention predated me by some centuries!) but who knows what I might learn or think about. After all, many millions of kettles had boiled and he was the only one (supposedly) to use that knowledge to improve the Newcomen engine. The second apocryphal tale retold by my nan was to stress the importance of perseverance as essential for achievement. This was accompanied by the well-worn proverb that, like Bruce’s spider, if at first you don’t succeed, try, try again. But what does this nostalgic detour have to do with efficiency? I will argue, plenty!

Whilst it may be possible to make many tasks more efficient (just imagine what life would be like without the washing machine, the car, the aeroplane), these efficiencies are dependent on many factors. For instance, without the ready availability of washing powder, petrol/electricity, airports etc., none of these inventions would survive. And don’t forget the role of the people who manufacture, service and maintain these machines, which have made our lives much more efficient. Nevertheless, humans have a great capacity for forgetting the impact of these efficiencies: can you imagine how much labour is involved in hand-washing for a family, in walking or horse-riding to the next village or town, or how limited our views would be without access (for some) to the world? We also forget that somebody was responsible for these inventions, beyond providing us with an answer to a quiz question. Someone, or groups of people, had the capacity first to observe a problem, before moving on to solve it. This is not just about scientists and engineers, who were predominantly male; after all, why would they care about women’s labour at the washtub and mangle?

This raises some interesting questions around the 20th-century growth and availability of household appliances, for example washing machines, tumble driers, hoovers, electric irons and ovens, pressure cookers and crock pots; the list goes on and on. There is no doubt that, with these appliances, women’s labour has been markedly reduced, both temporally and physically, and efficiency in the home has increased. But for whose benefit? Has this provided women with more leisure time, or is it so that their labour can be harnessed elsewhere? Research would suggest that women are busier than ever, trying to balance paid work with childcare, with housekeeping etc. So can we really say that women are more efficient in the 21st century than in previous centuries? It seems not. All that has really happened is that the work they do has changed and, in many ways, is less visible.

So what about the growth in technology, not least generative AI? Am I to believe, as I was told by Tomorrow’s World when growing up, that computers would improve human lives immensely, heralding the advent of the ‘leisure age’? Does the rise of generative AI such as ChatGPT mark a point where most work is made efficient? Unfortunately, I’ve yet to see any sign of the ‘leisure age’, suggesting that technology (including AI) may add different work, rather than create space for humans to focus on something more important.

I have academic colleagues across the world, who think AI is the answer to improving their personal, as well as institutional, efficiency. “Just imagine”, they cry, “you can get it to write your emails, mark student assessment, undertake the boring parts of research that you don’t like doing etc etc”. My question to them is, “what’s the point of you or me or academia?”.

If academic life is easily reducible to a series of tasks which a machine can do, then universities and academics have been living and selling a lie. If education is simply feeding words into a machine and copying that output into essays, articles and books, we don’t need academics; probably another machine will do the trick. If we’re happy for AI to read that output aloud on video, who needs classrooms and who needs lecturers? This efficiency could also be harnessed by students (something my colleagues are not so keen on) to write their assessments, which AI could then mark very swiftly.

All of the above sounds extremely efficient: learning/teaching can be done within seconds. Nobody need read or write anything ever again; after all, what is the point of knowledge when you’ve got AI everywhere you look… Of course, that relies on a particularly narrow understanding of knowledge, one which reduces it to that which is already known… It also presupposes that everyone will have access to technology at all times in all places, which we know is fundamentally untrue.

So, whatever will we do with all this free time? Will we simply sit back, relax and let technology do all the work? If so, how will humans earn money to pay the cost of simply existing, food/housing/sanitation etc.? Will unemployment become a desirable state of being, rather than the subject of long-standing opprobrium? If leisure becomes the default, will this provide greater space for learning, creating, developing, discovering etc., or will technology, fuelled by capitalism, condemn us all to mindless consumerism for eternity?

Criminology for all (including children and penguins)!

As a wise woman once wrote on this blog, ‘Criminology is everywhere!’, a statement I wholeheartedly agree with; certainly my latest module, Imagining Crime, has this mantra at its heart. This Christmas, I did not watch much television, as there were far more important things to do, including spending time with family and catching up on reading. But there was one film I could not miss! I should add a disclaimer here: I’m a huge fan of Wallace and Gromit, so it should come as no surprise that I made sure I was sitting very comfortably for Wallace & Gromit: Vengeance Most Fowl. The timing of the broadcast, as well as its age rating (PG), clearly indicates that the film is designed for family viewing, and even the smallest family members can find something to enjoy in the bright colours and funny-looking characters. However, there is something far darker hidden in plain sight.

All of Aardman’s Wallace and Gromit animations contain criminological themes: think sheep rustling, serial (or should that be cereal) murder and, of course, the original theft of the blue diamond. This latest outing is no different. As a team we talk a lot about Public Criminology, and for those who have never studied the discipline, there is no better place to start…. If you don’t believe me, let’s have a look at some of the criminological themes explored in the film:

Sentencing Practice

In 1993, Feathers McGraw was sent to prison (zoo) for life for his foiled attempt to steal the blue diamond (see The Wrong Trousers for more detail). If we consider that murder carries a mandatory life sentence and theft a maximum of seven years’ incarceration, it looks like our penguin offender has been the victim of a serious miscarriage of justice. No wonder he looks so cross!

Policing Culture

In Vengeance Most Fowl we are reacquainted with Chief Inspector Mackintosh (see The Curse of the Were-Rabbit for more detail) and meet PC Mukherjee, one an experienced copper and the other a rookie, fresh from her training. Leaving aside the size of the police force and the diversity reflected in the team (certainly not a reflection of policing in England and Wales), there is plenty more to explore. For example, Mackintosh is dismissive of Mukherjee’s training: learning is not enough, she must focus on developing a “copper’s gut”. Mukherjee also needs to show reverence toward her boss and is regularly criticised for overstepping the mark, for instance by filling the station with Wallace’s inventions. There is also the underlying message that the Chief Inspector is convinced of Wallace’s guilt and, therefore, evidence that points away from him should be ignored. Despite this, Mukherjee retains her enthusiasm for policing, stays true to her training and remains alert to all possibilities.

Prison Regime

The facility in which Feathers McGraw is incarcerated is bleak, like many of our Victorian prisons still in use (there are currently 32 in England and Wales). He has no bedding, no opportunities to engage in meaningful activities and appears to be subjected to solitary confinement. No wonder he has plenty of time and energy to focus on escape and vengeance! We hear the fear in the prison guard’s voice, as well as the disparaging comments directed toward the prisoner. All in all, what we see is a brutal regime designed to crush the offender. What is surprising is that Feathers McGraw still has the capacity to plot and scheme after 31 years of captivity….

Mitigating Factors

Whilst Feathers McGraw may be the mastermind, from prison he is unable to do a great deal for himself. He gets round this by hacking into the robot gnome, Norbot. But what of Norbot’s free will, so beloved of Classical Criminology? Should he be held culpable for his role, or do McGraw’s coercion and control render his part passive? Without Norbot (and his clones), no crime could be committed, but do the mitigating factors excuse his/their behaviour? Questions like this arise within the criminal justice system on a regular basis, admittedly not involving robot gnomes, but concerning the part played in criminality by mental illness, drug use, and the exploitation of children and other vulnerable people.

And finally:

Above are just some of the criminological themes I have identified, but there are many others, not least what appears to be Domestic Abuse, primarily coercive control, in Wallace and Gromit’s household. I also have not touched upon the implicit commentary around technology’s (including AI’s) tendency toward homogeneity. All of these will keep for classroom discussions when we get back to campus next week 🙂

Embracing Technology in Education: Prof. Ejikeme’s Enduring Influence

Sallek Yaks Musa, PhD, FHEA

When I heard about the sudden demise of one of my professors, I was once again reminded of the briefness and vanity of life, a topic the professor would often highlight during his lectures. Last Saturday, Prof. Gray Goziem Ejikeme was laid to rest amidst tributes, sadness, and gratitude for his life and impact. He was not only an academic and scholar but also a father and leader whose work profoundly influenced many.

I have read numerous tributes to Prof. Ejikeme, each recognizing his passion, dedication, and relentless pursuit of excellence, exemplified by his progression in academia. From lecturer to numerous administrative roles, including Head of Department, Faculty Dean, Deputy Vice Chancellor, and Acting Vice Chancellor, his career was marked by significant achievements. This blog is a personal reflection on Prof. Ejikeme’s life and my encounters with him, first as his student and later as an academic colleague when I joined the University of Jos as a lecturer.

Across social media, in our graduating class group, and on other platforms, I have seen many tributes recognizing Prof. Ejikeme as a professional lecturer who motivated and encouraged students. During my undergraduate studies, in a context where students had a limited voice compared to the ‘West,’ I once received a ‘D’ grade in a social psychology module led by Prof. Ejikeme. Dissatisfied, I mustered the courage to meet him and discuss my case. The complaint was treated fairly and the error rectified, reflecting his willingness to support students even when it wasn’t the norm. Although the grade didn’t change to what I had initially hoped for, it improved significantly, teaching me the importance of listening to and supporting learners.

Prof. Ejikeme’s classes were always engaging and encouraging. His feedback and responses to students were exemplary, a sentiment echoed in numerous tributes from his students. One tribute by Salamat Abu stood out to me: “Rest well, Sir. My supervisor extraordinaire. His comment on my first draft of chapter one boosts my morale whenever I feel inadequate.”

My interaction with Prof. Ejikeme significantly shaped my teaching philosophy to be student-centered and supportive. Reflecting on his demise, I reaffirmed my commitment to being the kind of lecturer and supervisor who is approachable and supportive, both within and beyond the classroom and university environment.

Prof. Ejikeme made teaching enjoyable and was never shy about embracing technology in learning. At a time when smartphones were becoming more prevalent, he encouraged students to invest in laptops and the internet for educational purposes. Unlike other lecturers who found laptop use during lectures distracting, he actively promoted it, believing in its potential to enhance learning. His forward-thinking approach greatly benefited me and many others.

Building on Prof. Ejikeme’s vision, today’s educators can leverage advancements in technology, particularly Artificial Intelligence (AI), to further enhance educational experiences. AI can personalize learning by adapting to each student’s pace and style, providing tailored feedback and resources. It can also automate administrative tasks, allowing educators to focus more on teaching and student interaction. For instance, AI-driven tools can analyse student performance data to identify learning gaps, recommend personalized learning paths, and predict future performance, helping educators intervene proactively.

Moreover, AI can support academics in research by automating data analysis, generating insights from large datasets, and even assisting in literature reviews by quickly identifying relevant papers. By embracing AI, academics can not only improve their teaching practices but also enhance their research capabilities, ultimately contributing to a more efficient and effective educational environment.

Prof. Ejikeme was ahead of his time in his willingness to embrace new technologies, and he set a precedent for leveraging innovative tools to support and improve learning outcomes. His legacy continues as we incorporate AI and other advanced technologies into education, following his example of using technology to create a more engaging and supportive learning experience.

Over the past six months, I have dedicated significant time to reflecting on my teaching practices, positionality, and the influence of my role as an academic on learners. Prof. Ejikeme introduced me to several behavioural theories in social psychology, including role theory. I find role theory particularly crucial in developing into a supportive academic. To succeed, one must balance one’s various roles and ensure that they are performed compatibly. For me, the golden rule is to ensure that our personal skills, privileges, dispositions, experiences from previous roles, motivations, and external factors do not undermine or negatively impact our role or overshadow our decisions.

So long, Professor GG Ejikeme. Your legacy lives on in the countless lives you touched.

Disclaimer: AI may have been used in this blog.

Is Criminology Up to Speed with AI Yet?

On Tuesday, 20th June 2023, the Black Criminology Network (BCN), together with some Criminology colleagues, was awarded the Culture, Heritage, and Environment Changemaker of the Year Award 2023. The University of Northampton Changemaker Awards is an event showcasing, recognising, and celebrating some of the key successes and achievements of staff, students, graduates, and community initiatives.

For this award, the BCN and the team held webinars with a diverse audience from across the UK and beyond to mark Black History Month. The webinars focused on issues around the ‘criminalisation of young Black males, the adultification of Black girls, and the role of the British Empire in the marking of Queen Elizabeth’s Jubilee’. BCN was commended for ‘creating a rare and much needed learning community that allows people to engage in conversations, share perspectives, and contextualise experiences.’ I congratulate the team!

The award to the BCN and Criminology colleagues reflects the effort and endeavour of Criminologists to better society. Although Criminology is considered a young discipline, the field and the criminal justice system have always demonstrated the capacity to make sense of criminogenic issues in society and to theorise about the future of crime and its administration/management. Radical changes in crime administration and control have altered not only the pattern of some crimes but also criminality and human behaviour under different situations and conditions. Even small steps, such as the use of fingerprints and DNA banks and the installation of CCTV cameras, have significantly transformed the discussion about crime, crime control, and its administration.

Criminologists have never been shy of reviewing, critiquing, recommending changes, and adapting to the ever-changing and dynamic nature of crime and society. One such change has been the now widely available artificial intelligence (AI) tools. In my last blog, I highlighted the morality of using AI by both academics and students in the education sector. This is no longer a topic of debate, as both academics and students now use AI in many ways, be it in reading, writing, formatting, referencing, research, or data analysis. Advanced use of various types of AI has been ongoing, and academics are only now waking up to the reality of language models such as Bing AI, ChatGPT, and Google Bard. For me, the debate should now be about tailoring artificial intelligence into the curriculum, examining current uses, and advancing knowledge and understanding of usage trends.

For Criminologists, teaching, research, and scholarship on the current advances and applications of AI in criminal justice administration should be prioritised. Key introductory criminological texts, including some in press, have yet to dedicate a chapter or more to emerging technologies, particularly AI-led policing and justice administration. Nonetheless, the use of AI-powered tools, particularly algorithms to aid decision-making by the police, parole boards, and the courts, is soaring, even if these tools are biased and not foolproof. Research is also seeking real-world applications for AI-supported ‘facial analysis for real-time profiling’, such as its use as an advanced polygraph in interviews at airport entry points. In 2022, AI-led research at the University of Chicago predicted, with 90% accuracy, the occurrence of crime in eight US cities. Interestingly, the scholars involved noted a systemic bias in crime enforcement, an issue quite common in the UK.

The use of AI and algorithms in criminal justice is a complex and controversial issue. There are many potential benefits to using AI, such as the ability to better predict crime, identify potential offenders, and make more informed decisions about sentencing. However, there are also concerns about the potential for AI to be biased or unfair, and to perpetuate systemic racism in the criminal justice system. It is important to carefully consider the ethical implications of using AI in criminal justice. Any AI-powered system must be transparent and accountable, and it must be designed to avoid bias. It is also important to ensure that AI is used in a way that does not disproportionately harm marginalized communities. The use of AI in criminal justice is still in its early stages, but it has the potential to revolutionize the way we think about crime and justice. With careful planning and implementation, AI can be used to make the criminal justice system fairer and more effective.

AI has the potential to revolutionize the field of criminology, and criminologists need to be at the forefront of this revolution. Criminologists need to be prepared to use AI to better understand crime, to develop new crime prevention strategies, and to make more informed decisions about criminal justice. Efforts should be made to examine the current uses of AI in the field, address biases and limitations, and advance knowledge and understanding of usage trends. By integrating AI into the curriculum and fostering a critical understanding of its implications, Criminologists can better equip themselves and future generations with the necessary tools to navigate the complex landscape of crime and justice. This, in turn, will enable them to contribute to the development of ethical and effective AI-powered solutions for crime control and administration.

Rise of the machines: fall of humankind

May is a pretty important month for me: Birthdays, graduations, what feels like a thousand Bank Holidays, marking deadlines, the end of Semester 2 and potentially some annual leave (if I haven’t crashed and crumbled beforehand). And all of the above is impacted by, or reliant on, the use of machines. Their programming, technology, assistance, and even hindrance will all have a large impact on my month of May, and what I am finding, increasingly so, is that the reliance on machines for pretty much everything on my list above is making me quite anxious for the days to come…

Employment, education, shopping, and leisure activities are all reliant on trusty ol’ machines and technology (which fuels the machines). The CRI1003 cohort can vouch for me when I claim that machines and technology, in relation to higher education, can be quite frustrating. Systems not working or going slow, connecting and disconnecting, machines which need updates to process the technology. They are also fabulous: online submissions, lecture slides shown across the entirety of the room not just on one teeny tiny screen, remote working, access to hundreds of online sources, videos, typing, all sorts! I think the convoluted point I am trying to get to is that the rise of the reliance on machines and technology has taken humankind by storm, and it has come with some frustrations and some moments of bliss and appreciation. But unfortunately, the moments of frustration have become somewhat etched onto the souls of humankind… will my laptop connect? Will my phone connect to the internet? Will my e-tickets download properly? Will my banking app load?

Why am I pondering about this now?

I am quite ‘old school’ in relation to some things. I am holding on strong to paper books (despite the glowing recommendations from friends for Kindles and e-readers), I use cash pretty much all the time (if it is not accepted, it is a VERY RARE occasion that the business will receive my custom), and I refuse to purchase a new phone or update the current coal-fuelled device I use (not literally, but I am trying to be creative). Why am I so committed to refusing to be swept along in the rise of the machines? Simple: I don’t trust them.

I have aired my views about using card/contactless to purchase goods elsewhere, and I fully appreciate I am in a minority when it comes to the reliance on cash. However, what happens when the card reader fails? What happens when the machine needs an update which will take 40 minutes and the backup machine also requires an update? Do traders and businesses just stop? What happens when the connection is weak, or the connection fails? What happens when my e-tickets don’t load, or my reservation, which went through on my end, didn’t actually go through on theirs? See, if I had spoken to someone, got their name and confirmed the reservation, or had the physical tickets, or the cash, then I would be ok. The reliance on machines removes the human touch. And it often adds an element of confusion when things go wrong: human error we can explain, but machine error? Harder to explain unless you’re in the know.

May should be a month of celebrations and joy: Birthdays, graduations, the end of the Semester, for some students the end of their studies. But all of this hinges on machines. Yes, it requires humans to organise and use the technology, but very little of it is actually reliant on humans themselves. I am oversimplifying. But I am also anxious. Anxious that a number of things we enjoy, rely on and require for daily life are becoming more and more machine-like by the day. I have an issue; can I talk to a human? Nope! Talk to a bot first, then see if a human is needed. So much of our lives is becoming reliant on machines, and I’m concerned it means more will go wrong…